markov chains - When to stop checking if a transition matrix is regular? Doesn’t this mean, though, that in theory you could keep calculating powers forever, because at some point one of the future transition matrices ...
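
One way to make the stopping question concrete is Wielandt's bound: if an n×n nonnegative matrix has any strictly positive power at all, then its power with exponent (n - 1)^2 + 1 is already strictly positive. The sketch below is a minimal pure-Python check built on that bound; the function names `mat_mul` and `is_regular` are illustrative choices, not taken from any of the quoted sources, and the input is assumed to be a square row-stochastic matrix given as a list of rows.

```python
def mat_mul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def is_regular(t):
    """Return True if some power of the stochastic matrix t is strictly positive.

    Only the zero/nonzero pattern matters, so entries are reduced to 0/1
    before multiplying. The search stops at Wielandt's bound (n - 1)^2 + 1.
    """
    n = len(t)
    pattern = [[1 if x > 0 else 0 for x in row] for row in t]
    power = pattern                      # pattern of t^1
    bound = (n - 1) ** 2 + 1             # largest exponent worth checking
    for _ in range(bound):
        if all(x > 0 for row in power for x in row):
            return True
        product = mat_mul(power, pattern)
        power = [[1 if x > 0 else 0 for x in row] for row in product]
    return False


if __name__ == "__main__":
    print(is_regular([[0.0, 1.0], [0.5, 0.5]]))   # second power is positive
    print(is_regular([[0.0, 1.0], [1.0, 0.0]]))   # powers alternate forever
```

Under this bound, a 2×2 matrix needs at most its second power checked and a 3×3 matrix at most its fifth, so the search always terminates.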

Regular Matrix - an overview | ScienceDirect Topics

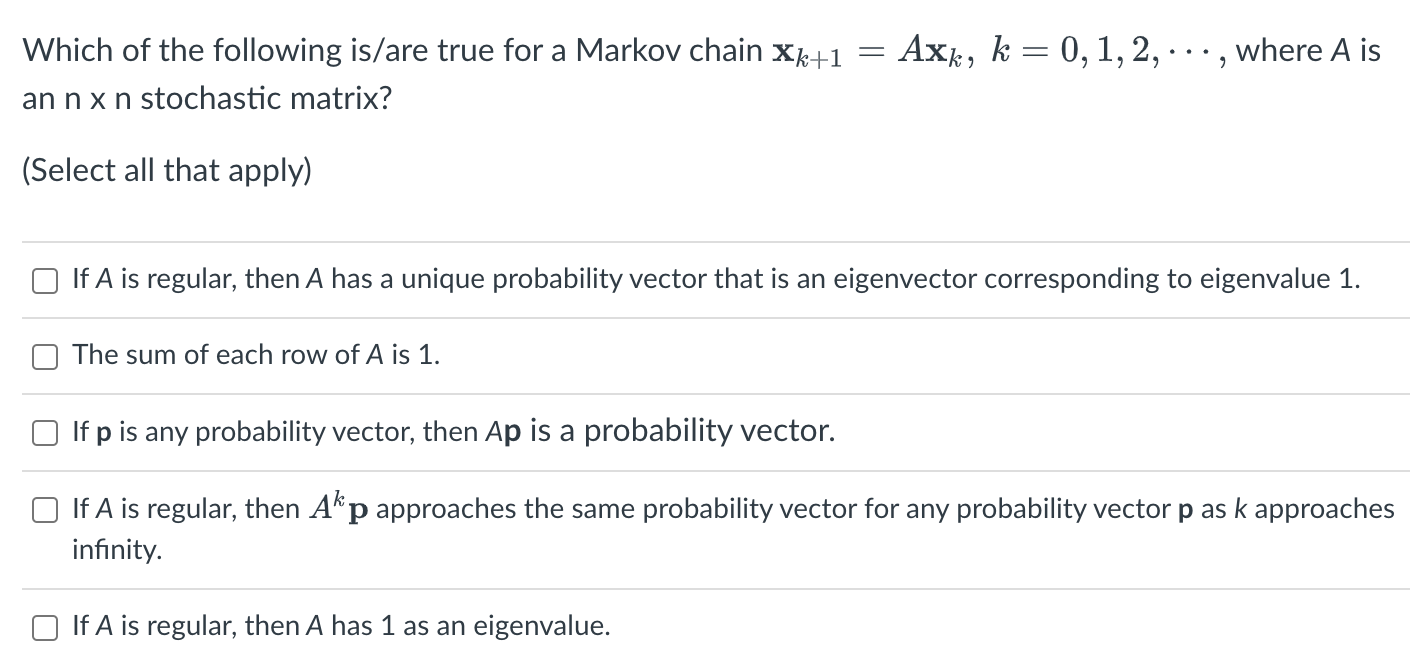

*Solved Which of the following is/are true for a Markov chain *

A stochastic square matrix is regular if some positive power has all entries nonzero. If the transition matrix M for a Markov chain is regular, then the ...
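
As a small worked example of this definition (the matrix is a hypothetical choice, written with rows summing to 1), a matrix can contain a zero entry and still be regular, because its square has none:

$$
T = \begin{pmatrix} 0 & 1 \\ \tfrac{1}{2} & \tfrac{1}{2} \end{pmatrix},
\qquad
T^2 = \begin{pmatrix} \tfrac{1}{2} & \tfrac{1}{2} \\ \tfrac{1}{4} & \tfrac{3}{4} \end{pmatrix}.
$$

Every entry of $T^2$ is nonzero, so $T$ is regular even though $T$ itself is not strictly positive.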

while my_mcmc: gently(samples) - MCMC sampling for dummies

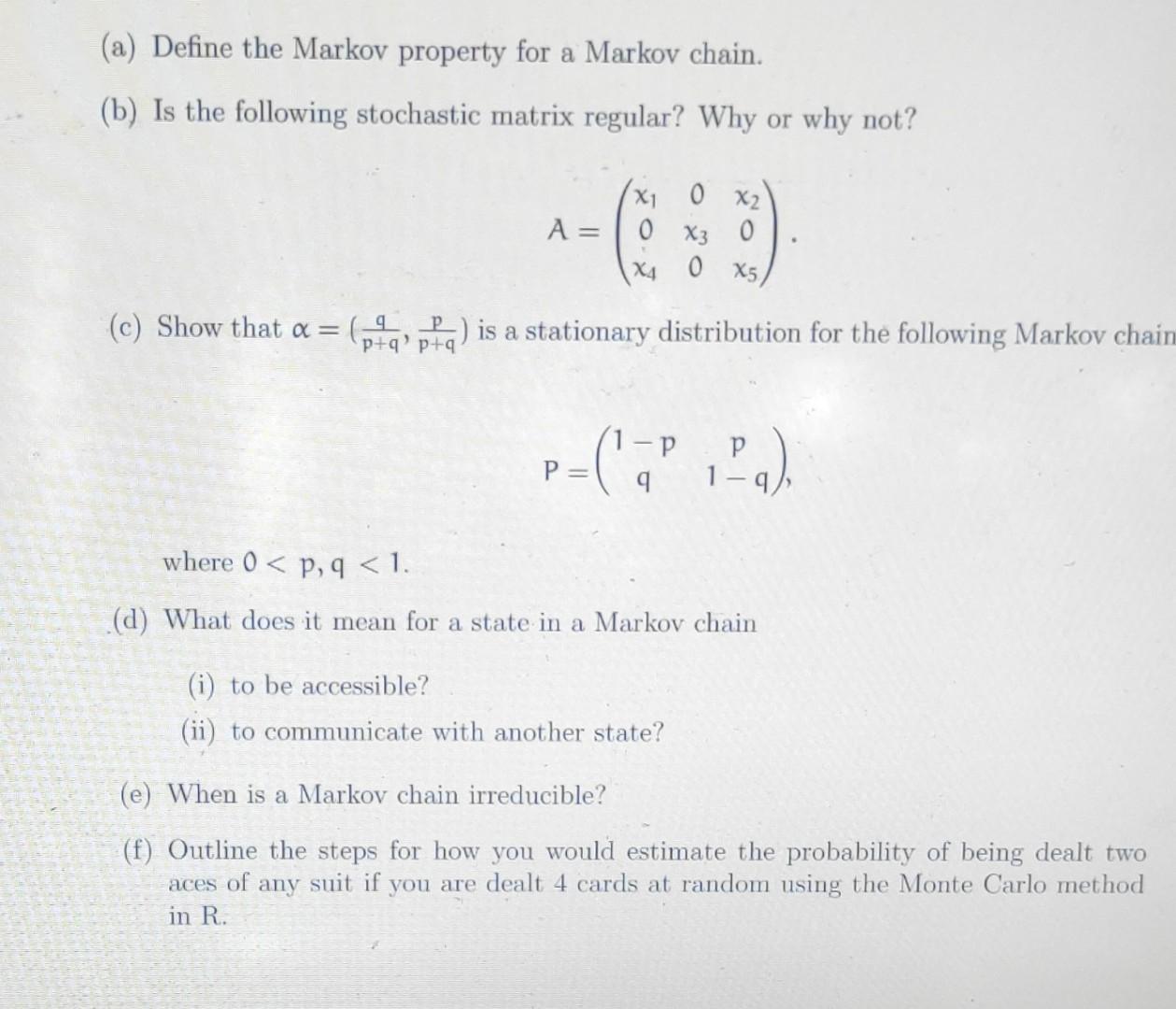

Solved (a) Define the Markov property for a Markov chain. | Chegg.com

Markov chain Monte Carlo means constructing a Markov chain to do Monte Carlo approximation. As you know, a normal distribution has two parameters – mean ...
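
To illustrate what "constructing a Markov chain to do Monte Carlo approximation" can look like, here is a minimal random-walk Metropolis sketch. It is not the code from the quoted post: the target (a standard normal), the function name `metropolis_normal`, the step size and the seed are all assumptions chosen for illustration.

```python
import math
import random


def metropolis_normal(n_samples, step=1.0, seed=0):
    """Sample from a standard normal using a random-walk Metropolis chain."""
    rng = random.Random(seed)            # fixed seed so runs are repeatable
    x = 0.0                              # arbitrary starting state
    samples = []
    for _ in range(n_samples):
        proposal = x + rng.gauss(0.0, step)
        # Log of the density ratio p(proposal) / p(x) for the N(0, 1) target.
        log_ratio = 0.5 * (x * x - proposal * proposal)
        # Accept with probability min(1, ratio); otherwise stay at x.
        if rng.random() < math.exp(min(0.0, log_ratio)):
            x = proposal
        samples.append(x)
    return samples


if __name__ == "__main__":
    draws = metropolis_normal(20000)
    mean = sum(draws) / len(draws)
    var = sum((d - mean) ** 2 for d in draws) / len(draws)
    print(mean, var)                     # should land near 0 and 1
```

Each new state depends only on the current one, which is what makes the sequence of samples a Markov chain.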

11.3: Ergodic Markov Chains - Statistics LibreTexts

Definition: A Markov chain is called an ergodic chain if it is possible to go from every state to every state (not necessarily in one move). A Markov chain is called a regular chain if some power of the transition matrix has only positive elements. In other ...
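
A small worked example, not taken from the source, shows a chain that can go from every state to every state and yet has no power with only positive elements. Take the two-state chain that always switches state:

$$
P = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix},
\qquad
P^{2k} = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix},
\qquad
P^{2k+1} = P
\qquad (k \ge 0).
$$

Every power of $P$ contains zeros, so no power is strictly positive, even though each state is reachable from the other in one step.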

9.2: Regular Markov Chains

DEFINITION 1. A transition matrix (stochastic matrix) T is said to be regular if some power of T has all positive entries (i.e. strictly greater than zero).

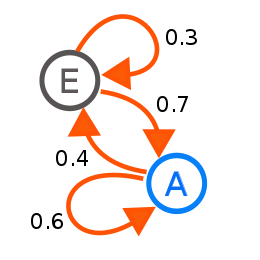

Markov chain - Wikipedia

(Figure: a diagram representing a two-state Markov process. The numbers are the probabilities of changing from one state to another.) Markov chains have many ...
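
A two-state process like the one in the figure can be sketched in a few lines. The transition probabilities below are hypothetical and are not the numbers from the Wikipedia diagram; the helper name `evolve` is likewise an illustrative choice. Rows of `T` are assumed to sum to 1, and distributions are treated as row vectors.

```python
def evolve(dist, t, steps):
    """Push a row distribution through `steps` transitions of matrix t."""
    n = len(t)
    for _ in range(steps):
        # New probability of state j = sum over i of P(in i) * P(i -> j).
        dist = [sum(dist[i] * t[i][j] for i in range(n)) for j in range(n)]
    return dist


# Hypothetical two-state chain: T[i][j] is the probability of moving i -> j.
T = [[0.9, 0.1],
     [0.5, 0.5]]

if __name__ == "__main__":
    start = [1.0, 0.0]                   # begin in state 0 with certainty
    print(evolve(start, T, 1))           # distribution after one step
    print(evolve(start, T, 2))           # distribution after two steps
```

Starting in state 0, the distribution after two steps is approximately (0.86, 0.14), since 0.9 · 0.9 + 0.1 · 0.5 = 0.86.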

10.3: Regular Markov Chains - Mathematics LibreTexts

A Markov chain is said to be a regular Markov chain if some power of its transition matrix has only positive entries. Let T be a transition matrix for a regular Markov chain.
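
A standard consequence of regularity is that repeated transitions drive any starting distribution toward a single stationary distribution. The sketch below approximates it by plain iteration; it assumes T is row-stochastic and applied to row vectors, and the name `stationary_distribution`, the tolerance and the example matrix are illustrative assumptions rather than anything from the quoted text.

```python
def stationary_distribution(t, tol=1e-12, max_iter=10_000):
    """Approximate the stationary row vector of a regular transition matrix."""
    n = len(t)
    dist = [1.0 / n] * n                 # start from the uniform distribution
    for _ in range(max_iter):
        new = [sum(dist[i] * t[i][j] for i in range(n)) for j in range(n)]
        # Stop once one more transition no longer changes the distribution.
        if max(abs(a - b) for a, b in zip(new, dist)) < tol:
            return new
        dist = new
    raise ValueError("no convergence within max_iter iterations")


if __name__ == "__main__":
    # Hypothetical regular chain; all entries are already positive.
    T = [[0.9, 0.1],
         [0.5, 0.5]]
    print(stationary_distribution(T))    # close to (5/6, 1/6)
```

For this example the limit can be checked by hand: the balance condition 0.1 · π₀ = 0.5 · π₁ together with π₀ + π₁ = 1 gives π = (5/6, 1/6).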

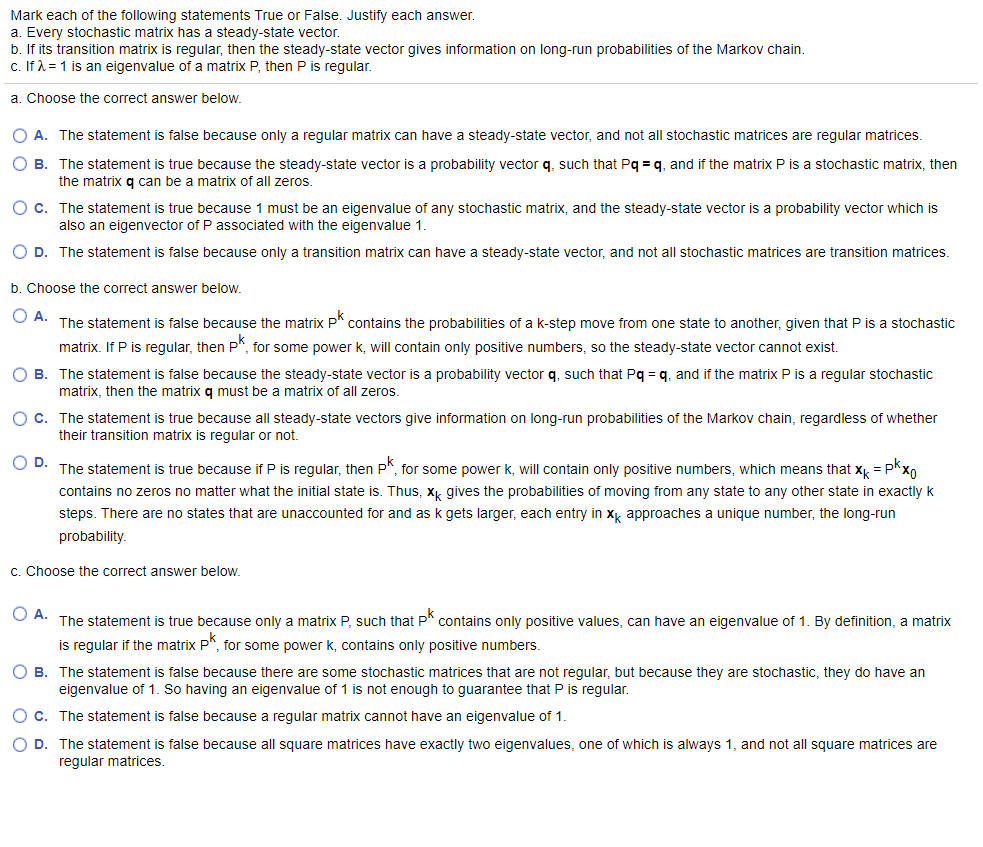

Markov Chains: lecture 2.

Note: the initial distribution could have “weight” on all shops. Defn: A Markov chain with finite state space is regular if some power of its transition matrix ...

DEFINITION 6.2.1. A Markov chain is a regular Markov chain if the transition matrix is primitive. (Recall that a matrix A is primitive if there is ...